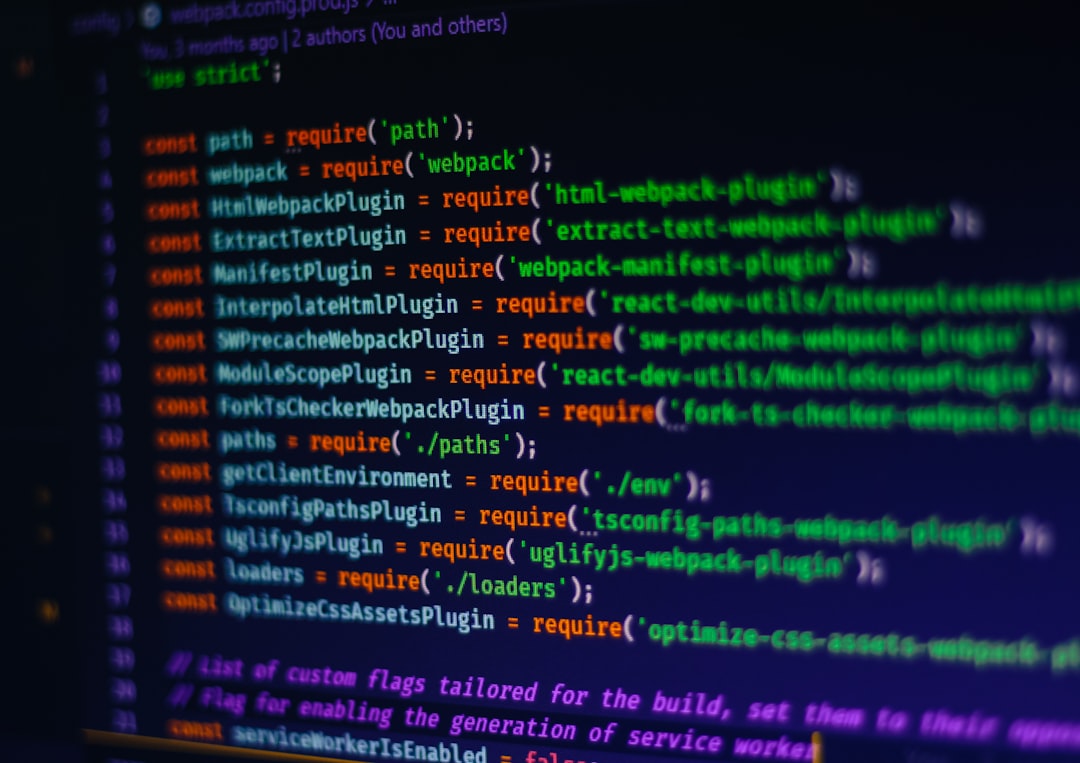

Three weeks ago I reviewed a pull request from a junior developer on our team. The code was clean—suspiciously clean. Good variable names, proper error handling, even JSDoc comments. I approved it, deployed it, and moved on.

Then our SAST scanner flagged it. Hardcoded API keys in a utility function. An SQL query built with string concatenation buried inside a helper. A JWT validation that checked the signature but never verified the expiration. All wrapped in beautiful, well-commented code that looked like it was written by someone who knew what they were doing.

“Oh yeah,” the junior said when I asked about it. “I vibed that whole module.”

Welcome to 2026, where “vibe coding” isn’t just a meme—it’s Collins Dictionary’s Word of the Year for 2025, and it’s fundamentally reshaping how we think about software security.

What Exactly Is Vibe Coding?

The term was coined by Andrej Karpathy, co-founder of OpenAI and former AI lead at Tesla, in February 2025. His definition was refreshingly honest:

Karpathy’s original description: “You fully give in to the vibes, embrace exponentials, and forget that the code even exists. [...] I ‘Accept All’ always, I don’t read the diffs anymore. When I get error messages I just copy paste them in with no comment.”

That’s the key distinction. Using an LLM to help write code while reviewing every line? That’s AI-assisted development. Accepting whatever the model generates without understanding it? That’s vibe coding. As Simon Willison put it: “If an LLM wrote every line of your code, but you’ve reviewed, tested, and understood it all, that’s not vibe coding.”

And look, I get the appeal. I’ve used Claude Code and Cursor extensively—I wrote about my Claude Code experience recently. These tools are genuinely powerful. But there’s a massive difference between using AI as a force multiplier and blindly accepting generated code into production.

The Security Numbers Are Terrifying

Let me throw some stats at you that should make any security engineer lose sleep:

In December 2025, CodeRabbit analyzed 470 open-source GitHub pull requests and found that AI co-authored code contained 2.74x more security vulnerabilities than human-written code. Not 10% more. Not merely double. Nearly triple.

The same study found 1.7x more “major” issues overall, including logic errors, incorrect dependencies, flawed control flow, and misconfigurations that were 75% more common in AI-generated code.

And then there’s the Lovable incident. In May 2025, security researchers discovered that 170 out of 1,645 web applications built with the vibe coding platform Lovable had vulnerabilities that exposed personal information to anyone on the internet. That’s roughly a 10% critical vulnerability rate (170 / 1,645 ≈ 10.3%) right out of the box.

The real danger: AI-generated code doesn’t look broken. It looks polished, well-structured, and professional. It passes the eyeball test. But underneath those clean variable names, it’s often riddled with security flaws that would make a penetration tester weep with joy.

The Top 5 Security Nightmares I’ve Found in Vibed Code

After spending the last several months auditing code across different teams, I’ve built up a depressingly predictable list of security issues that LLMs keep introducing. Here are the greatest hits:

1. The “Almost Right” Authentication

LLMs love generating auth code that’s 90% correct. JWT validation that checks the signature but skips expiration. OAuth flows that don’t validate the state parameter. Session management that uses predictable tokens.

    # Vibed code that looks fine but is dangerously broken
    import jwt  # PyJWT
    from fastapi import HTTPException  # assuming a FastAPI app

    def verify_token(token: str) -> dict:
        try:
            payload = jwt.decode(
                token,
                SECRET_KEY,
                algorithms=["HS256"],
                # Missing: options={"require": ["exp"]} (exp is only checked when the claim is present)
                # Missing: audience verification
                # Missing: issuer verification
            )
            return payload
        except jwt.InvalidTokenError:
            raise HTTPException(status_code=401)

This code will pass every code review from someone who doesn’t specialize in auth. It decodes the JWT, checks the algorithm, handles the error. But it’s missing critical validation that an attacker will find in about five minutes.
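To make the missing checks concrete, here is a minimal sketch of the claim validation that should happen after the signature has been verified. The names `validate_claims` and `ClaimError`, the 30-second leeway, and the example issuer are all my own illustrative assumptions. In real code you should let your JWT library enforce these rules (PyJWT's `decode` accepts `audience`, `issuer`, and `options={"require": [...]}`) rather than hand-rolling them.

```python
import time
from typing import Optional


class ClaimError(Exception):
    """Raised when a decoded JWT payload fails a claim check."""


def validate_claims(
    payload: dict,
    *,
    audience: str,
    issuer: str,
    now: Optional[float] = None,
    leeway: int = 30,
) -> dict:
    """Check exp, aud and iss on a payload whose signature is already verified."""
    now = time.time() if now is None else now

    # exp must be present; a token without it would otherwise never expire.
    exp = payload.get("exp")
    if not isinstance(exp, (int, float)):
        raise ClaimError("missing or malformed exp claim")
    if now > exp + leeway:
        raise ClaimError("token has expired")

    # aud may be a single string or a list of strings.
    aud = payload.get("aud")
    if isinstance(aud, str):
        audiences = [aud]
    elif isinstance(aud, list):
        audiences = aud
    else:
        audiences = []
    if audience not in audiences:
        raise ClaimError("token was not issued for this audience")

    if payload.get("iss") != issuer:
        raise ClaimError("unexpected issuer")

    return payload
```

The point of the sketch is the shape of the checks: a missing `exp` is treated as a failure, not as "nothing to verify", which is exactly the gap the vibed version leaves open.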

2. SQL Injection Wearing a Disguise

Modern LLMs know they should use parameterized queries. So they do—most of the time. But they’ll sneak in string formatting for table names, column names, or ORDER BY clauses where parameterization doesn’t work, and they won’t add any sanitization.

    # The LLM used parameterized queries... except where it didn't
    async def get_user_data(user_id: int, sort_by: str):
        query = f"SELECT * FROM users WHERE id = $1 ORDER BY {sort_by}"  # 💀
        return await db.fetch(query, user_id)
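Since identifiers like column names can't be bound as query parameters, the usual fix is an allowlist. Here is a minimal sketch, assuming a hypothetical `SORTABLE_COLUMNS` mapping and a helper named `build_user_query`; the column names are made up for illustration.

```python
# Hypothetical allowlist: maps the sort keys the API accepts to real column names.
SORTABLE_COLUMNS = {
    "name": "name",
    "created": "created_at",
    "email": "email",
}


def build_user_query(sort_by: str, descending: bool = False) -> str:
    """Return the SQL text; the user id is still passed as the $1 parameter."""
    try:
        column = SORTABLE_COLUMNS[sort_by]
    except KeyError:
        raise ValueError(f"unsupported sort key: {sort_by!r}") from None
    direction = "DESC" if descending else "ASC"
    # Only values taken from the allowlist above are ever interpolated.
    return f"SELECT * FROM users WHERE id = $1 ORDER BY {column} {direction}"
```

The handler would then call something like `await db.fetch(build_user_query(sort_by), user_id)`. User input only selects a key in the mapping and never reaches the SQL string itself.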

3. Secrets Hiding in Plain Sight

LLMs are trained on millions of code examples that include hardcoded credentials, API keys, and connection strings. When they generate code for you, they often follow the same patterns—embedding secrets directly in configuration files, environment setup scripts, or even in application code with a comment saying “TODO: move to env vars.”
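The alternative I push teams toward is loading secrets from the environment and failing loudly when one is missing. A minimal sketch, where `require_secret` and `MissingSecretError` are names I made up for illustration:

```python
import os


class MissingSecretError(RuntimeError):
    """Raised at startup when a required secret is not configured."""


def require_secret(name: str) -> str:
    """Read a secret from the environment and refuse to run without it."""
    value = os.environ.get(name, "").strip()
    if not value:
        # Fail fast rather than falling back to a hardcoded default.
        raise MissingSecretError(f"environment variable {name} is not set")
    return value
```

At startup you would call something like `require_secret("PAYMENTS_API_KEY")` (a hypothetical variable name). There is deliberately no default argument, because a default is where the hardcoded key tends to creep back in.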

4. Overly Permissive CORS

Almost every vibed web application I’ve audited has Access-Control-Allow-Origin: * in production. LLMs default to maximum permissiveness because it “works” and doesn’t generate errors during development.
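What you want instead is an exact-match allowlist of origins. Here is a framework-agnostic sketch; the origins and the `cors_headers` helper are hypothetical, and most web frameworks ship CORS middleware that takes an equivalent list of allowed origins.

```python
from typing import Dict, FrozenSet, Optional

# Hypothetical origins; replace with the front ends that really call your API.
ALLOWED_ORIGINS: FrozenSet[str] = frozenset({
    "https://app.example.com",
    "https://admin.example.com",
})


def cors_headers(
    origin: Optional[str],
    allowed: FrozenSet[str] = ALLOWED_ORIGINS,
) -> Dict[str, str]:
    """Return CORS response headers for an exactly matching origin, else none."""
    if origin is None or origin not in allowed:
        # No header means the browser blocks the cross-origin read.
        return {}
    return {
        "Access-Control-Allow-Origin": origin,
        # Tell caches that the response varies by requesting origin.
        "Vary": "Origin",
    }
```

Exact string matching matters here. Prefix or substring checks are a classic way to let `https://app.example.com.evil.test` through.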

5. Missing Input Validation Everywhere

LLMs generate the happy path beautifully. Form handling, data processing, API endpoints—all functional. But edge cases? Malicious input? File upload validation? These get skipped or half-implemented with alarming consistency.
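As one example of the unhappy path, here is a rough sketch of the checks I expect around a file upload. The extension list, the 5 MiB limit, and the `validate_upload` name are assumptions for illustration, not a complete policy. In particular, an extension check says nothing about the actual file contents.

```python
import posixpath

ALLOWED_EXTENSIONS = {".png", ".jpg", ".jpeg", ".pdf"}  # hypothetical policy
MAX_UPLOAD_BYTES = 5 * 1024 * 1024  # hypothetical 5 MiB limit


def validate_upload(filename: str, data: bytes) -> str:
    """Return a safe base filename, or raise ValueError if the upload is rejected."""
    # Drop directory components so "../../x.png" cannot escape the upload dir.
    safe_name = posixpath.basename(filename.replace("\\", "/"))
    if not safe_name or safe_name.startswith("."):
        raise ValueError("invalid filename")

    ext = posixpath.splitext(safe_name)[1].lower()
    if ext not in ALLOWED_EXTENSIONS:
        raise ValueError(f"file type {ext or '(none)'} is not allowed")

    if len(data) == 0:
        raise ValueError("empty upload")
    if len(data) > MAX_UPLOAD_BYTES:
        raise ValueError("upload exceeds size limit")

    return safe_name
```

None of this is clever. It is simply the code that never gets generated unless someone asks for it.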

Why LLMs Are Structurally Bad at Security

This isn’t just about current limitations that will get fixed in the next model version. There are structural reasons why LLMs struggle with security:

They’re trained on average code. The internet is full of tutorials, Stack Overflow answers, and GitHub repos with terrible security practices. LLMs absorb all of it. They generate code that reflects the statistical average of what exists online—and the average is not secure.

Security is about absence, not presence. Good security means ensuring that bad things don’t happen. But LLMs are optimized to generate code that does things—that fulfills functional requirements. They’re great at building features, terrible at preventing attacks.

Context windows aren’t threat models. A security engineer reviews code with a mental model of the entire attack surface. “If this endpoint is public, and that database stores PII, then we need rate limiting, input validation, and encryption at rest.” LLMs see a prompt and generate code. They don’t think about the attacker who’ll be probing your API at 3 AM.

Security insight: The METR study from July 2025 found that experienced open-source developers were actually 19% slower when using AI coding tools—despite believing they were 20% faster. The perceived productivity gain is often an illusion, especially when you factor in the time spent fixing security issues downstream.

How to Vibe Code Without Getting Owned

I’m not going to tell you to stop using AI coding tools. That ship has sailed—even Linus Torvalds vibe coded a Python tool in January 2026. But if you’re going to let the vibes flow, at least put up some guardrails:

1. SAST Before Every Merge

Run static analysis on every single pull request. Tools like Semgrep, Snyk, or SonarQube will catch the low-hanging fruit that LLMs routinely miss. Make it a hard gate—no green CI, no merge.

    # GitHub Actions / Gitea workflow - non-negotiable
    - name: Security Scan
      run: |
        # --error makes semgrep exit non-zero when it reports findings
        if ! semgrep --error --config=p/security-audit --config=p/owasp-top-ten .; then
          echo "❌ Security issues found. Fix before merging."
          exit 1
        fi

2. Never Vibe Your Auth Layer

Authentication, authorization, session management, crypto—these are the modules where a single bug means game over. Write these by hand, or at minimum, review every single line the AI generates against OWASP guidelines. Better yet, use battle-tested libraries like python-jose, passport.js, or Spring Security instead of letting an LLM roll its own.

3. Treat AI Output Like Untrusted Input

This is the mindset shift that will save you. You wouldn’t take user input and shove it directly into a SQL query (I hope). Apply the same paranoia to AI-generated code. Review it. Test it. Question it. The LLM is not your senior engineer—it’s an extremely fast intern who read a lot of Stack Overflow.

4. Set Up Dependency Scanning

LLMs love pulling in packages. Sometimes those packages are outdated, unmaintained, or have known CVEs. Run npm audit, pip-audit, or trivy as part of your CI pipeline. I’ve seen vibed code pull in packages that were deprecated two years ago.

5. Deploy with Least Privilege

Assume the vibed code has vulnerabilities (it probably does). Design your infrastructure so that when—not if—something gets exploited, the blast radius is limited. Principle of least privilege isn’t new advice, but it’s never been more important.

Pro tip: Create a SECURITY.md in every repo and include it in your AI tool’s context. Define your auth patterns, banned functions, and security requirements. Some AI tools like Claude Code actually read these files and follow the patterns—but only if you tell them to.

The Open Source Problem Nobody’s Talking About

A January 2026 paper titled “Vibe Coding Kills Open Source” raised an alarming point that’s been bothering me too. When everyone vibe codes, LLMs gravitate toward the same large, well-known libraries. Smaller, potentially better alternatives get starved of attention. Nobody files bug reports because they don’t understand the code well enough to identify issues. Nobody contributes patches because they didn’t write the integration code themselves.

The open-source ecosystem runs on human engagement—people who use a library, understand it, find bugs, and contribute back. Vibe coding short-circuits that entire feedback loop. We’re essentially strip-mining the open-source commons without replanting anything.

Gear That Actually Helps

If you’re going to do AI-assisted development (the responsible kind, not the full-send vibe coding kind), invest in tools that keep you honest:

- 📘 The Web Application Hacker’s Handbook — Still the gold standard for understanding how web apps get exploited. Read it before you let an AI write your next API. ($35-45)

- 📘 Threat Modeling: Designing for Security — Learn to think like an attacker. No LLM can do this for you. ($35-45)

- 🔐 YubiKey 5 NFC — Hardware security key for SSH, GPG, and MFA. Because vibed code might leak your credentials, so at least make them useless without physical access. ($45-55)

- 📘 Zero Trust Networks — Build infrastructure that assumes breach. Essential reading when your codebase is partially written by a statistical model. ($40-50)

Key Takeaways

Vibe coding is here to stay. The productivity gains are real, the convenience is undeniable, and fighting it is like fighting the tide. But as someone who’s spent 12 years in security, I’m begging you: don’t vibe your way into a breach.

- In CodeRabbit’s analysis of 470 pull requests, AI co-authored code had 2.74x more security vulnerabilities than human-written code

- Never vibe code authentication, authorization, or crypto—write these by hand or use proven libraries

- Run SAST on every PR—make security scanning a merge gate, not an afterthought

- Treat AI output like untrusted input—review, test, and question everything

- The productivity perception is often wrong: in the METR study, experienced open-source developers were 19% slower with AI tools while believing they were 20% faster

Use AI as a force multiplier, not a replacement for understanding. The vibes are good until your database shows up on Have I Been Pwned.

Have you had security scares from vibed code? I’d love to hear your war stories—drop a comment below or reach out on social.


Some links in this article are affiliate links. If you buy something through these links, I may earn a small commission at no extra cost to you. I only recommend products I actually use or have thoroughly researched.